|

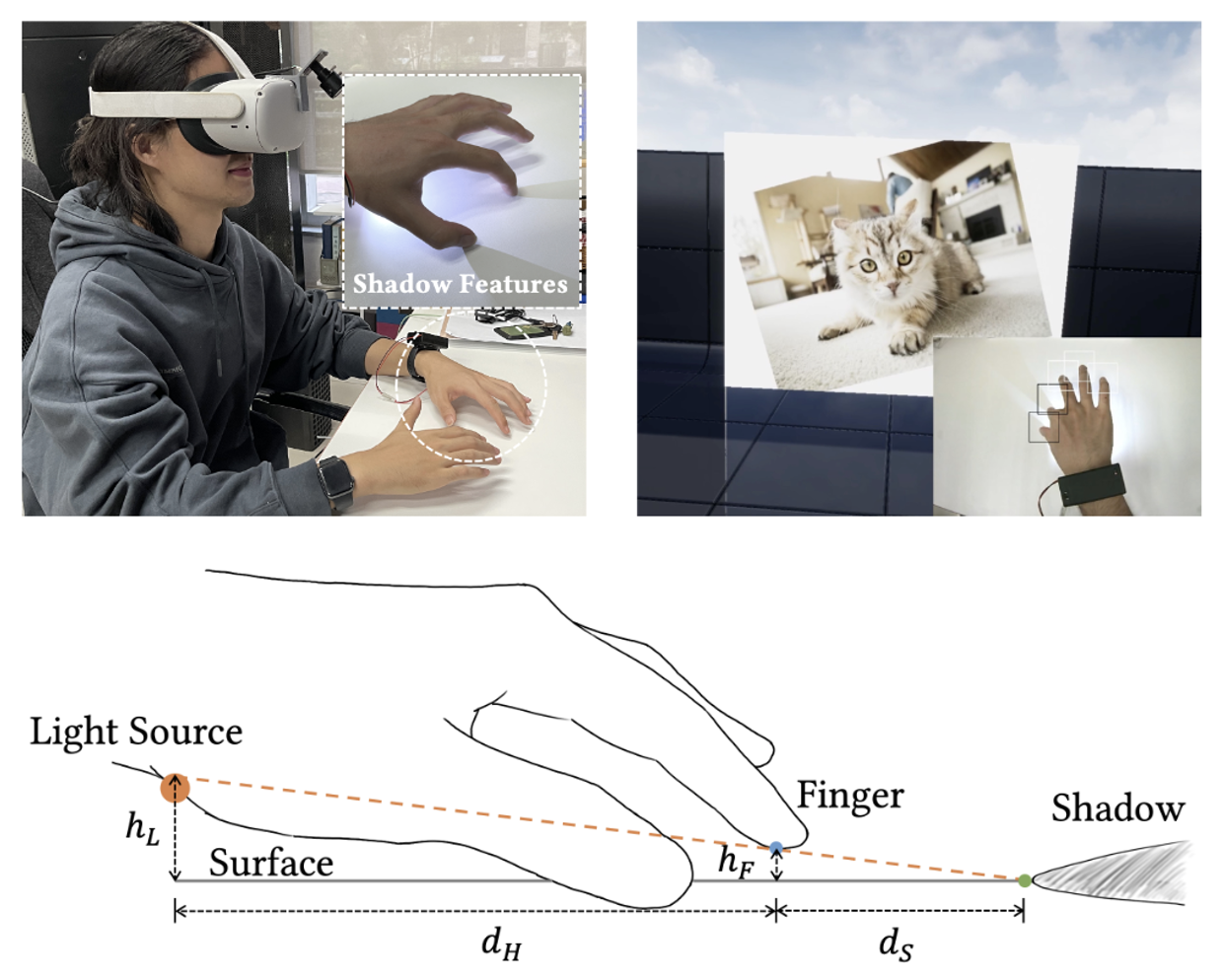

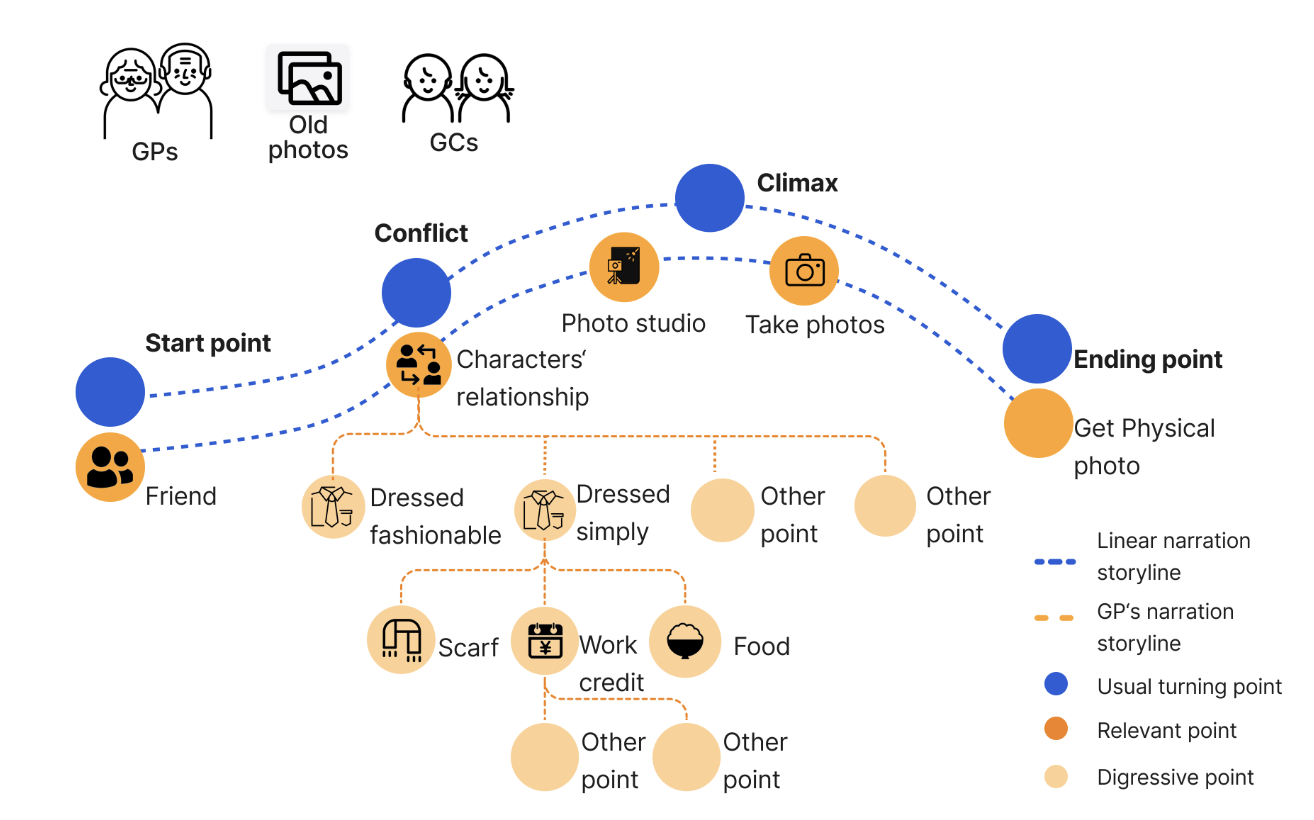

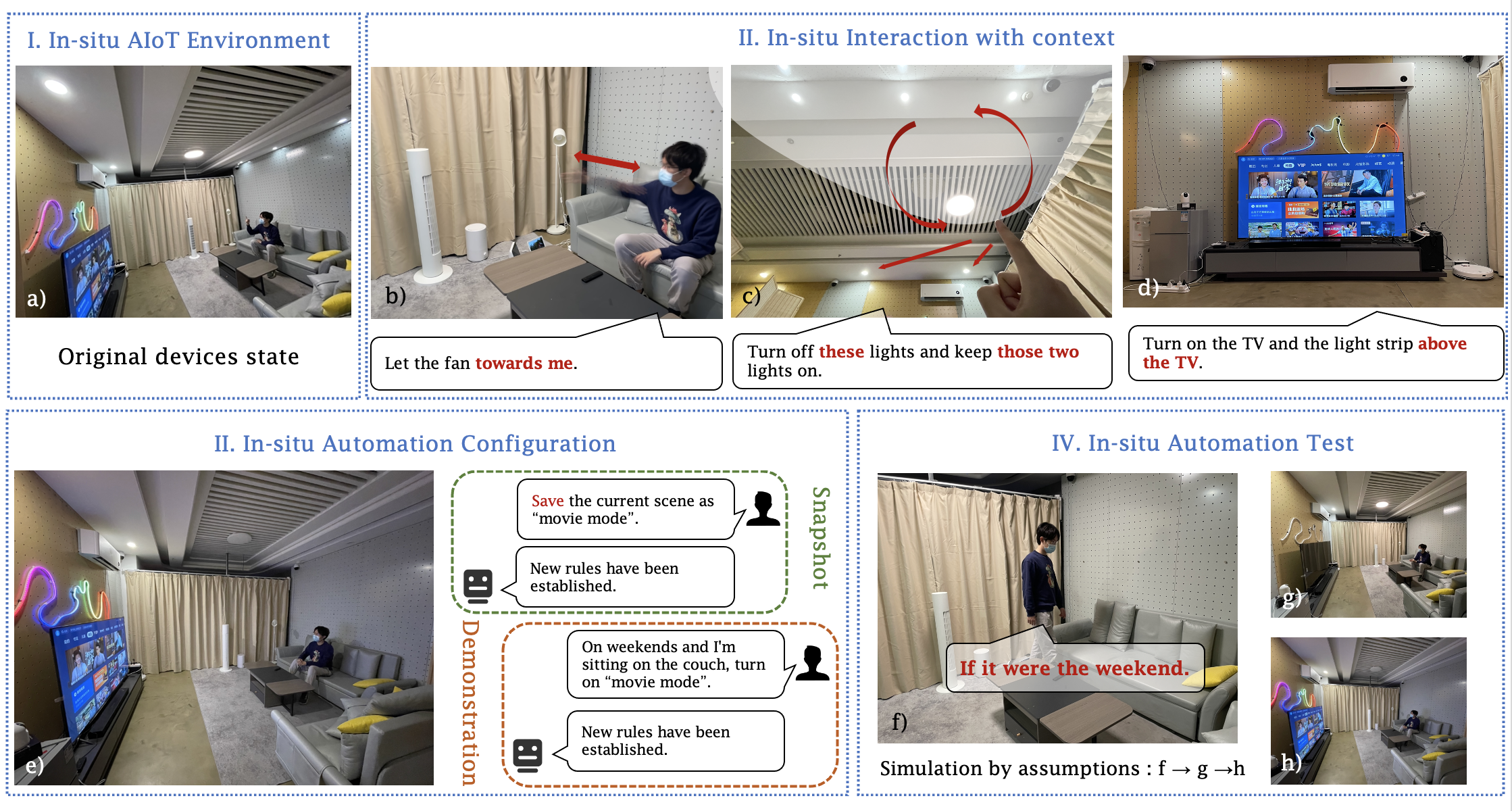

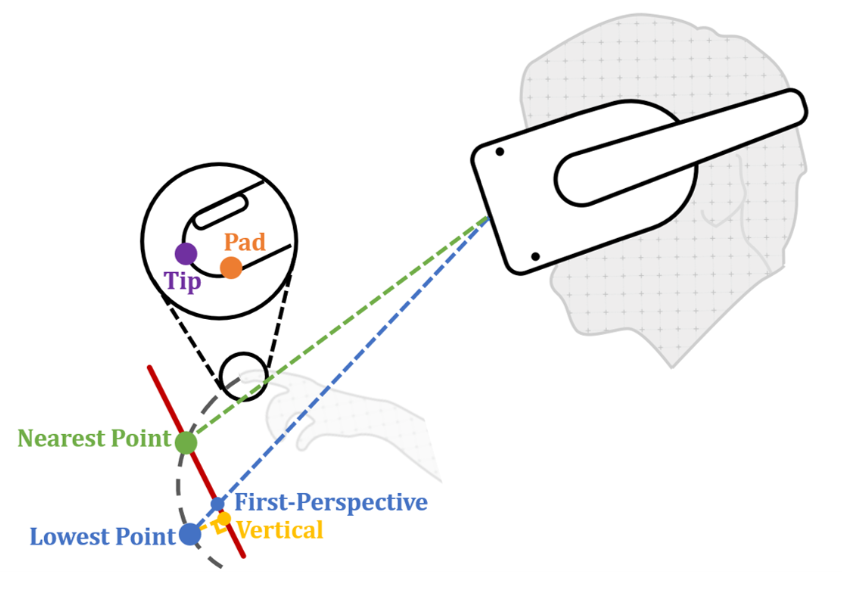

Hi! This is Chen, a computer science Ph.D. candidate in the Pervasive Computing Group at Tsinghua University, supervised by Prof. Yuanchun Shi and Prof. Chun Yu. I received my Bachelor's degree in Computer Science from Tsinghua University in 2019. My main research direction is Human-Computer Interaction (HCI), and I also keep track of research updates in natural language processing and computer vision.

My research focuses on facilitating natural and efficient interaction schemes with compact sensor form factors in XR and mobile scenarios by leveraging multi-modality sensing (e.g., vision, audio, inertial signals, and RF). My previous and ongoing work has expanded the user's input capability in both the spatial domain (e.g., enabling precise, unobtrusive input on the fingertip) and the temporal domain (e.g., enhancing the recognition of fast and transient gestures). My goal is to develop fundamental interaction techniques that let a user interact seamlessly with everything around them in the next-generation XR interface (just as the mouse and the keyboard did for the GUI).

Recent News:

1. It was a great pleasure to give a talk at HKUST(GZ). Thanks to Prof. Mingming Fan for the invitation.
2. One paper has been conditionally accepted by IMWUT (May 2023). Congratulations to my collaborators!
3. One paper has been conditionally accepted by UIST 2023 (pending minor revision). Congratulations to my collaborators!
4. Our paper "Enabling Voice-Accompanying Hand-to-Face Gesture Recognition with Cross-Device Sensing" has received the Honorable Mention Award at CHI 2023! Congratulations to Zisu!

|

|

Honorable Mention Award)
